Introduction

I recently stumbled upon an interesting article about Kindle Web DRM: “How I Reversed Amazon’s Kindle Web Obfuscation Because Their App Sucked”.

I was surprised (not really) to learn that Kindle books read through a web browser are protected by DRM, which can complicate the reading experience for users who prefer using different devices or applications.

How Kindle Web DRM works

Via the Kindle web reader at read.amazon.com, when the user requests to “start reading”, the web client issues an API call which returns a .tar file containing the book data:

- page_data_0_4.json

- glyphs.json (SVG definitions for each glyph/character)

- toc.json

- metadata.json

- location_map.json

The glyph ID trick

The “text” is not provided as normal Unicode text but as sequences of glyph IDs (numbers) referencing the glyphs in glyphs.json.

The numbers in the page data aren’t letters; they’re glyph IDs. The character ‘T’ isn’t Unicode code point 84, it’s glyph 24.

This is where the DRM comes in: instead of encrypting the text directly, Amazon uses this indirection layer with glyph IDs to make it harder to extract the actual text content.

Further, I learned that Amazon randomizes the mapping between glyph IDs and letters for every request (a batch of roughly five pages), so a single mapping table cannot be reused across the whole book: glyph ID 24 might mean ‘T’ in one batch and something completely different in the next.
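To see why one mapping table cannot be reused, it helps to key the lookup on the glyph shape instead of the glyph ID. The snippet below is a minimal sketch under stated assumptions: the batch objects, their `glyphs` and `run` fields, and the SVG path strings are all hypothetical stand-ins, since the real glyphs.json layout is not reproduced here.

```javascript
// Hypothetical data: two batches of the same book. The glyph IDs differ
// between batches, but the SVG path of a given letter is assumed stable.
const batchA = {
  glyphs: { 24: "M0 0H10V2H6V12H4V2H0Z", 7: "M0 0H2V12H0Z" },
  run: [24, 7],
};
const batchB = {
  glyphs: { 3: "M0 0H10V2H6V12H4V2H0Z", 24: "M0 0H2V12H0Z" },
  run: [3, 24],
};

// Lookup keyed on the shape, which survives re-randomization of the IDs.
const pathToChar = new Map([
  ["M0 0H10V2H6V12H4V2H0Z", "T"],
  ["M0 0H2V12H0Z", "l"],
]);

// Resolve each glyph ID through the batch's own table, then through the
// shape lookup. Unknown shapes become U+FFFD (replacement character).
function decodeRun(batch, shapes) {
  return batch.run
    .map((id) => shapes.get(batch.glyphs[id]) ?? "\uFFFD")
    .join("");
}

console.log(decodeRun(batchA, pathToChar), decodeRun(batchB, pathToChar));
```

Glyph 24 resolves to ‘T’ in the first batch and to ‘l’ in the second, yet both runs decode to the same text because the lookup never depends on the ID. Comparing path strings exactly is a simplification made for this example.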

The original solution

The “working” solution the author used involved:

- Rendering each glyph SVG into a raster image

- Computing a perceptual similarity score (SSIM, the structural similarity index) for the rendered glyph

- Matching each glyph image against a rendered reference font set for all characters (A-Z, a-z, 0-9, punctuation, ligatures)

This yields a mapping from glyph ID to character. Since the SVG paths are stable (though the glyph IDs change), the rendered shape is constant across requests, so the image-matching technique works. Then the full text, paragraphs, bold/italic styling, and alignment could be reconstructed.
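The matching step can be sketched roughly as follows. This is not the original author's code: it computes a global, single-window SSIM over tiny grayscale bitmaps (real implementations usually average SSIM over sliding windows), and the 3x3 reference bitmaps are made up for illustration.

```javascript
// Global SSIM between two equally sized grayscale images, given as flat
// arrays of 0..255 pixel values. Uses the usual constants K1=0.01, K2=0.03.
function ssim(a, b) {
  if (a.length !== b.length || a.length === 0) {
    throw new Error("images must have the same non-zero size");
  }
  const n = a.length;
  const mean = (v) => v.reduce((sum, x) => sum + x, 0) / n;
  const ma = mean(a);
  const mb = mean(b);
  let va = 0;
  let vb = 0;
  let cov = 0;
  for (let i = 0; i < n; i++) {
    va += (a[i] - ma) ** 2;
    vb += (b[i] - mb) ** 2;
    cov += (a[i] - ma) * (b[i] - mb);
  }
  va /= n;
  vb /= n;
  cov /= n;
  const c1 = (0.01 * 255) ** 2;
  const c2 = (0.03 * 255) ** 2;
  return ((2 * ma * mb + c1) * (2 * cov + c2)) /
    ((ma * ma + mb * mb + c1) * (va + vb + c2));
}

// Pick the reference character whose bitmap is most similar to the glyph.
function bestMatch(glyphPixels, references) {
  let best = { char: null, score: -Infinity };
  for (const [char, pixels] of Object.entries(references)) {
    const score = ssim(glyphPixels, pixels);
    if (score > best.score) best = { char, score };
  }
  return best;
}

// Made-up 3x3 bitmaps standing in for rendered reference glyphs.
const references = {
  T: [255, 255, 255, 0, 255, 0, 0, 255, 0],
  l: [0, 255, 0, 0, 255, 0, 0, 255, 0],
};
// A slightly noisy rendering of the "T" shape.
const renderedGlyph = [250, 255, 255, 0, 255, 10, 0, 255, 0];

console.log(bestMatch(renderedGlyph, references).char);
```

Because the comparison is on pixels rather than IDs, the same reference set can be reused for every batch.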

Investigating the Web Reader

I decided to investigate this myself. I “purchased” a free Kindle book, opened it in the web reader, and started inspecting the network requests.

Initial request

As described, when opening the book, a request is made to:

curl 'https://read.amazon.com/service/mobile/reader/startReading?asin=B078S55Z34&clientVersion=20000100' \

-H 'accept: */*' \

-b [TRUNCATED] \

-H 'device-memory: 8' \

-H 'dnt: 1' \

-H 'downlink: 10' \

-H 'x-adp-session-token: {enc:}{iv:}{name:}{serial:}'

This returns a JSON response:

{

"YJFormatVersion": "CR!6CEHGNG2G53N95AK5X3MBNVMJWAP",

"clippingLimit": 15,

"contentChecksum": null,

"contentType": "EBOK",

"contentVersion": "70fe460d",

"deliveredAsin": "B078S55Z34",

"downloadRestrictionReason": null,

"expirationDate": null,

"format": "YJ",

"formatVersion": "CR!BD7FM2TJZX34VA1K9JK7S127CJSF",

"fragmentMapUrl": null,

"hasAnnotations": false,

"isOwned": true,

"isSample": false,

"karamelToken": {

"expiresAt": 1760889436363,

"token": "[TRUNCATED]"

},

"kindleSessionId": "",

"lastPageReadData": {

"deviceName": "Kindle Cloud Reader",

"position": 439053,

"syncTime": 1760887101000

},

"manifestUrl": null,

"metadataUrl": "https://k4wyjmetadata.s3.amazonaws.com/books2/B078S55Z34/70fe460d/CR%21BD7FM2TJZX34VA1K9JK7S127CJSF/book/YJmetadata.jsonp?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Date=20251019T155216Z&X-Amz-SignedHeaders=host&X-Amz-Expires=600&X-Amz-Credential=[TRUNCATED]&X-Amz-Signature=c4bff25b2528bbf071a4b64bd593455a5828f02d4299e8b28923dfa0635b6320",

"originType": "Purchase",

"pageNumberUrl": null,

"requestedAsin": "B078S55Z34",

"srl": 2818,

"verticalLayout": false

}

Token-Based authentication

The karamelToken is then passed in the x-amz-rendering-token header of subsequent requests to fetch the actual book content.
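As a rough illustration of how a client might use this field, here is a small sketch that checks the expiry before building the header. It rests on an assumption: that the header carries the `token` string from the `karamelToken` object. The exact wire format is not visible in the truncated captures, and `renderingHeaders` is a hypothetical helper name.

```javascript
// Build the header for a render request from a startReading response.
// Assumption: the header value is the `token` string; this is not
// confirmed by the captures shown in this post.
function renderingHeaders(startReading, nowMs = Date.now()) {
  const karamel = startReading.karamelToken;
  if (!karamel || !karamel.token) {
    throw new Error("startReading response has no karamelToken");
  }
  // expiresAt is a millisecond timestamp in the captured response.
  if (nowMs >= karamel.expiresAt) {
    throw new Error("karamelToken expired; a fresh token is needed");
  }
  return { "x-amz-rendering-token": karamel.token };
}
```

Passing `nowMs` explicitly keeps the expiry check easy to exercise without depending on the system clock.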

Their backend is probably using Karamel:

Karamel is a management tool for reproducibly deploying and provisioning distributed applications on bare-metal, cloud or multi-cloud environments. Karamel provides explicit support for reproducible experiments for distributed systems. Source: Karamel Documentation

Metadata request

The metadataUrl provides a URL to fetch the book metadata, which returns a JSONP response like this:

loadMetadata({

"ACR": "CR!BD7FM2TJZX34VA1K9JK7S127CJSF",

"asin": "B078S55Z34",

"authorList": ["Kovach, Carla"],

"bookSize": "576632",

"bookType": "mobi7",

"cover": "images/cover.jpg",

// ... additional metadata

"title": "The Next Girl: A gripping crime thriller...",

"version": "70fe460d",

"startPosition": 5,

"endPosition": 497150

});
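Since the response is JSONP rather than plain JSON, it has to be unwrapped before parsing. A minimal sketch, assuming the real payload inside `loadMetadata(...)` is strict JSON (the `// ...` line in the excerpt above is my own elision, not part of the response); `parseJsonp` is a hypothetical helper name.

```javascript
// Strip the callback wrapper from a JSONP body and parse the payload.
function parseJsonp(body, callbackName) {
  const text = body.trim();
  const prefix = callbackName + "(";
  if (!text.startsWith(prefix)) {
    throw new Error(`expected a call to ${callbackName}()`);
  }
  // The payload ends at the last closing parenthesis (before any ";").
  const end = text.lastIndexOf(")");
  if (end < prefix.length) {
    throw new Error("unterminated JSONP call");
  }
  return JSON.parse(text.slice(prefix.length, end));
}

const metadata = parseJsonp(
  'loadMetadata({"asin":"B078S55Z34","startPosition":5,"endPosition":497150});',
  "loadMetadata"
);
console.log(metadata.endPosition);
```

Parsing the payload as data avoids evaluating the response as a script, which is what a JSONP callback would normally do.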

Rendering request

Finally, the renderer/render request is made to fetch the actual book content:

curl 'https://read.amazon.com/renderer/render?version=3.0&asin=B078S55Z34&contentType=FullBook&revision=70fe460d&fontFamily=Bookerly&fontSize=8.91&lineHeight=1.4&dpi=160&height=998&width=1398&marginBottom=0&marginLeft=9&marginRight=9&marginTop=0&maxNumberColumns=2&theme=dark&locationMap=true&packageType=TAR&encryptionVersion=NONE&numPage=6&skipPageCount=0&startingPosition=2820&bundleImages=false' \

-b [TRUNCATED] \

-H 'device-memory: 8' \

-H 'dnt: 1' \

-H 'downlink: 10' \

-H 'x-amz-rendering-token: [karamelToken obtained from the previous response]'
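For readability, the same query string can be assembled from named parameters. This is only a sketch: `buildRenderUrl` is a hypothetical helper, and the default values are simply the ones from the captured request above, not documented defaults.

```javascript
// Rebuild the renderer/render URL from named parameters. Defaults are
// copied from the captured request; `overrides` replaces any of them.
function buildRenderUrl(asin, revision, startingPosition, overrides = {}) {
  const params = new URLSearchParams({
    version: "3.0",
    asin,
    contentType: "FullBook",
    revision,
    fontFamily: "Bookerly",
    fontSize: "8.91",
    lineHeight: "1.4",
    dpi: "160",
    height: "998",
    width: "1398",
    marginBottom: "0",
    marginLeft: "9",
    marginRight: "9",
    marginTop: "0",
    maxNumberColumns: "2",
    theme: "dark",
    locationMap: "true",
    packageType: "TAR",
    encryptionVersion: "NONE",
    numPage: "6",
    skipPageCount: "0",
    startingPosition: String(startingPosition),
    bundleImages: "false",
    ...overrides,
  });
  return `https://read.amazon.com/renderer/render?${params}`;
}

console.log(buildRenderUrl("B078S55Z34", "70fe460d", 2820));
```

Note that layout settings such as font, page size, and margins are part of the request, which suggests pagination is computed server-side for the requested viewport.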

TAR archive contents

Listing the contents of the downloaded archive gives the following files:

$ tar -tf book.tar

layout_data_0_5.json

toc.json

metadata.json

panels_0_5.json

glyphs.json

page_data_0_5.json

tokens_0_5.json

location_map.json

manifest.json
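The same listing can be produced in JavaScript, which is handy when working with the response body directly. Below is a minimal sketch of a TAR reader based on the standard 512-byte header layout (name at offset 0, octal size at offset 124). It is a simplification: it ignores the ustar `prefix` field and extended headers, and `listTarEntries` is a hypothetical helper, not the reader's own code.

```javascript
// Walk the 512-byte TAR headers in a Uint8Array and list the entries.
function listTarEntries(bytes) {
  const decoder = new TextDecoder();
  // Read a NUL-terminated ASCII field from the archive.
  const field = (start, length) => {
    const raw = bytes.subarray(start, start + length);
    const nul = raw.indexOf(0);
    return decoder.decode(nul === -1 ? raw : raw.subarray(0, nul));
  };
  const entries = [];
  let offset = 0;
  while (offset + 512 <= bytes.length) {
    const name = field(offset, 100);
    if (name === "") break; // an all-zero block marks the end of the archive
    const size = parseInt(field(offset + 124, 12).trim(), 8) || 0;
    entries.push({ name, size, dataOffset: offset + 512 });
    // File data is padded up to the next multiple of 512 bytes.
    offset += 512 + Math.ceil(size / 512) * 512;
  }
  return entries;
}
```

Each entry's bytes can then be read with `bytes.subarray(dataOffset, dataOffset + size)` and, for the JSON files, decoded and passed to `JSON.parse`.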

Rate limiting

As described in the article, trying to increase the numPage parameter to get more pages in a single request does not work:

{"message":"Unexpected number of pages"}

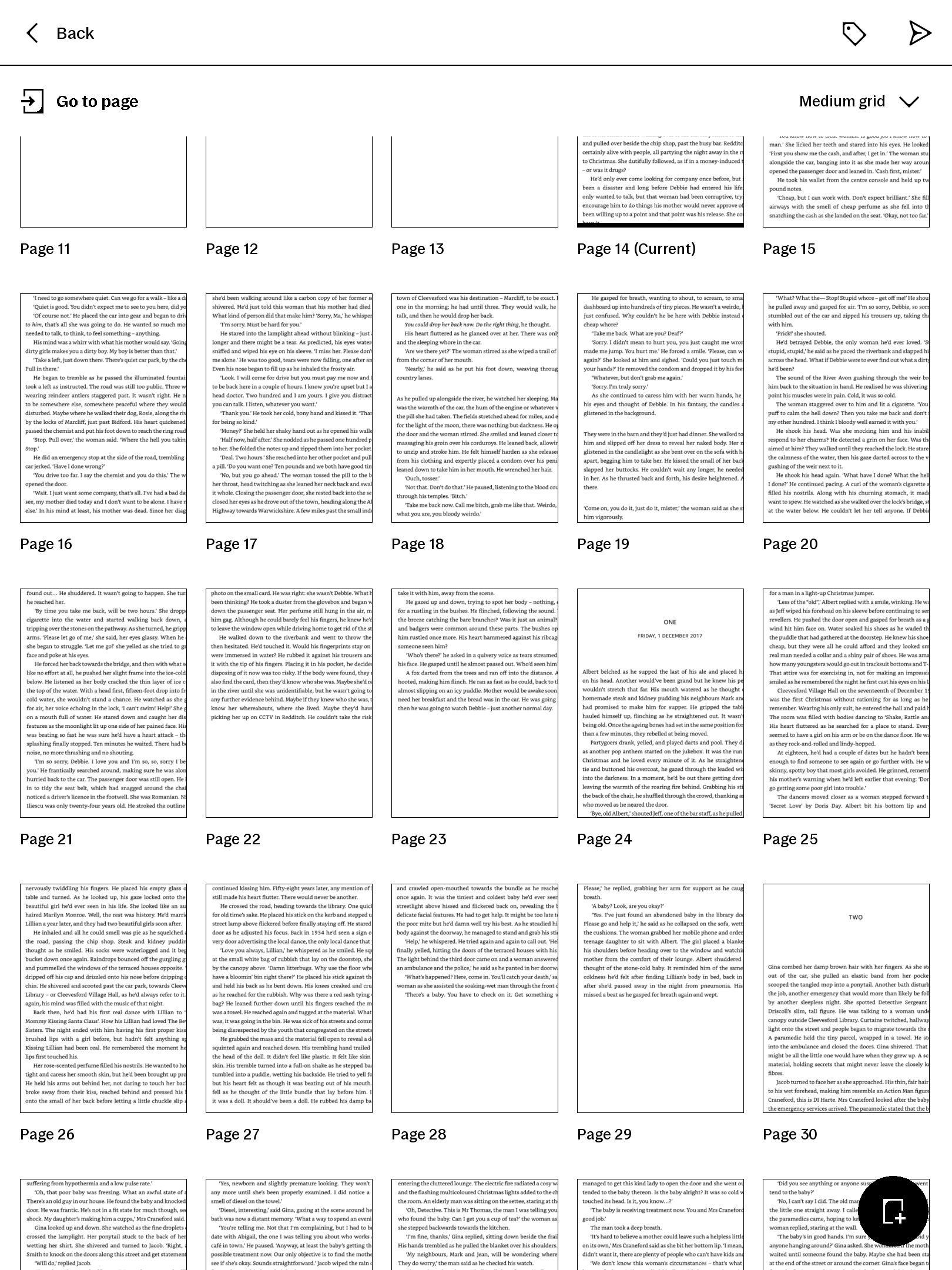

Request-to-Image flow in the Kindle Web Reader

I opened Chrome DevTools and quickly noticed a lot of blob URLs being created when navigating through the book. This hinted that the Kindle Web Reader was rendering pages to <canvas> elements and then converting them to images for display.

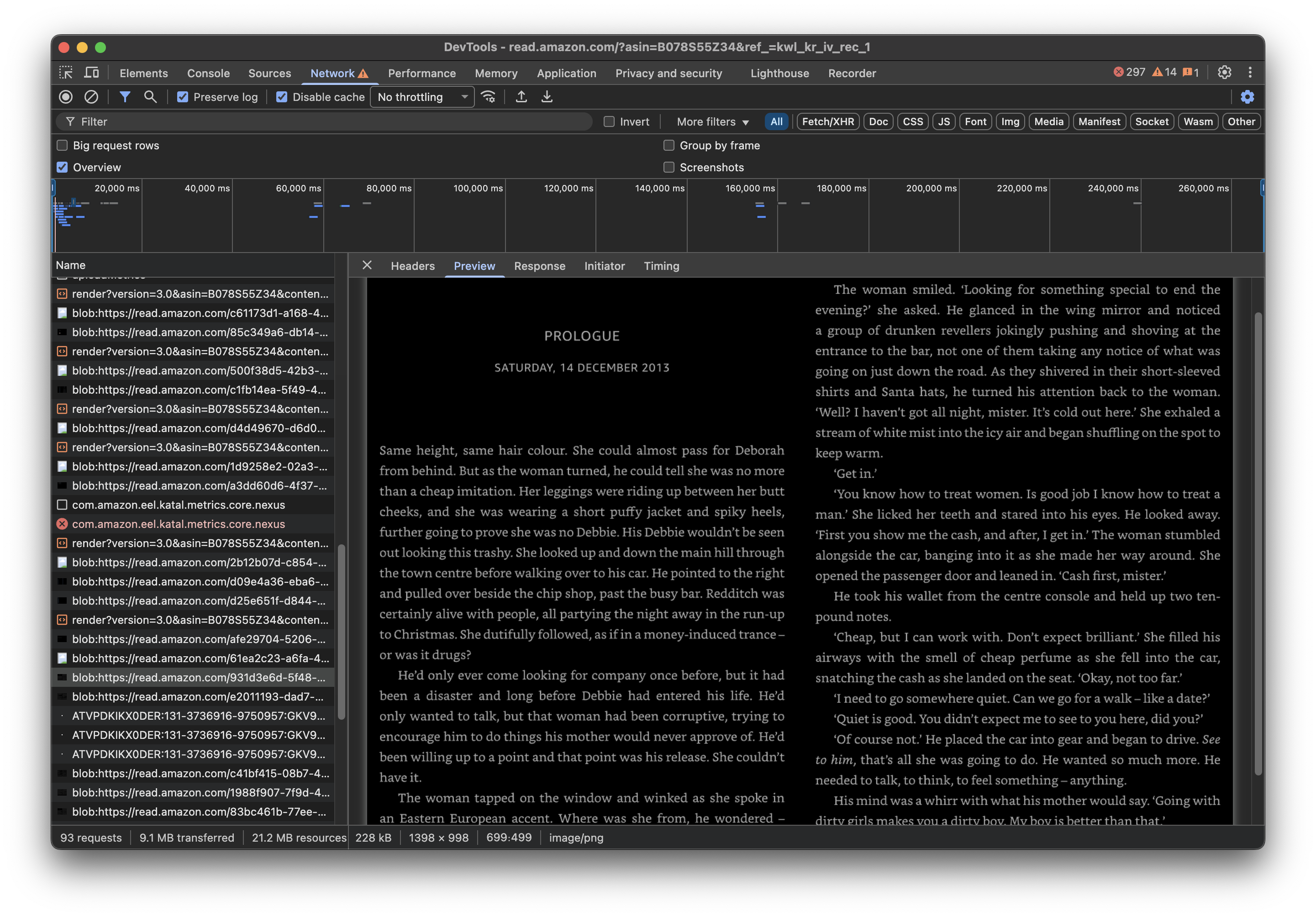

Call stack analysis

I captured the request call stack of a blob while navigating through a book:

Image

blobToImage @ B1LdOhqxr7L.js:98227

canvasToImage @ B1LdOhqxr7L.js:98220

convertCanvasInBackground @ B1LdOhqxr7L.js:101714

renderInMainThread @ B1LdOhqxr7L.js:101672

render @ B1LdOhqxr7L.js:101667

render @ B1LdOhqxr7L.js:112737

setTimeout

renderPages @ B1LdOhqxr7L.js:112603

Understanding the flow

I traced back the flow to understand how the initial request to renderer/render leads to the final image rendering:

1. Navigation layer

The navigation layer (CachingRendererController.getPageByRelativeIndex) orchestrates page loading. When a “next page” action is triggered, it resolves pending renders, selects the correct cache slot, and eventually calls renderBatch. That method prepares a navigation request describing the desired page count, offsets, and whether images should be bundled.

CachingRendererController.renderBatch triggering the fetch:

renderBatch(e, t, i) {

return this.pendingRenderDeferred = new r.B,

new Promise((r, o) => {

setTimeout(() => l(this, void 0, void 0, function*() {

try {

const s = t === n.Direction.Backward ? e + 1 : e,

l = this.createNavigationOptions(

this.navRef.startPositionId,

s,

t,

this.options.readAheadPageCount

);

let c = yield this.options.bookProviderFactory.get(

this.asin,

this.revision,

l,

this.getRenderSettings()

);

if (t === n.Direction.Backward && (c = c.reverse()),

this.renderingProcessId === i) {

const i = this.renderPages(e, c, t, l.pageCount);

r(i);

} else {

o(new a.w8);

}

} catch (e) {

o(e);

} finally {

this.pendingRenderDeferred.resolve();

}

}), 0);

});

}

createNavigationOptions wiring UI state into the request:

createNavigationOptions(e, t, i, n) {

const r = this.navRef.getRelativeSlot(t, i);

return {

pageCount: n,

direction: i,

offset: r?.offset ?? 0,

bundleImages: this.options.bundleImages,

startPositionId: e,

includeLocationMap: this.options.includeLocationMap

};

}

2. Network request

ServiceBookProviderFactory.get turns that navigation request into the network call we observed. Its ServiceDataLoader companion builds the query string, matching every parameter in the captured curl URL (fontFamily, fontSize, lineHeight, dimensions, theme, packageType=TAR, pagination hints, etc.), and forwards it to DefaultKaramelClient.renderBook. The fetch attaches the short-lived x-amz-rendering-token header before hitting https://read.amazon.com/renderer/render. On success the response body is cached along with the refreshed token pulled from the karamel-rendering-token header.

ServiceBookProviderFactory.get delegating to the data loader:

get(e, t, i, n) {

return o(this, void 0, void 0, function*() {

const r = new s.ServiceDataLoader(

this.metricsUtil,

this.options,

this.packageType,

e,

t,

i,

n,

this.client

);

return yield r.load();

});

}

ServiceDataLoader fetch and TAR load:

const c = {

version: l.version ?? o.g.VERSION,

asin: i.asin,

contentType: i.contentType,

revision: i.revision,

fontFamily: l.fontFamily,

fontSize: String(l.fontSize),

lineHeight: String(l.lineHeight),

dpi: String(l.dpi),

height: String(l.pageHeight),

width: String(l.pageWidth),

marginBottom: String(l.marginBottom),

marginLeft: String(l.marginLeft),

marginRight: String(l.marginRight),

marginTop: String(l.marginTop),

maxNumberColumns: l.maxNumberColumns

? String(l.maxNumberColumns)

: o.g.DEFAULT_MAX_COLUMN,

theme: String(l.theme ?? "custom").toLowerCase(),

locationMap: String(l.includeLocationMap),

packageType: this.packageType,

encryptionVersion: "NONE"

};

const h = yield this.metricsUtil.asyncExecuteMetrics(

"RequestKaramelData",

() => this.fetch(c)

);

const u = yield this.metricsUtil.asyncExecuteMetrics(

"GetKaramelData",

() => h.clone().arrayBuffer()

);

const d = h.headers.get("karamel-rendering-token");

const p = d != null ? JSON.parse(d) : void 0;

this.packageDataLoader = new a.t(

new s.J(u),

this.metricsUtil,

this.options.imageDecipher,

p

);

const f = yield this.packageDataLoader.getManifest();

if (f.cdn && f.cdnResources) {

this.cdnDataLoader.addImages(f.cdn, f.cdnResources, p);

}

return this.packageDataLoader;

3. TAR processing

The payload is a TAR archive. ServiceDataLoader.load pipes the response into TarDataLoader, which indexes every entry (page_*.json, layout_data_*.json, img_*, tokens_*, etc.) and asynchronously populates ServiceBookProviderAdapter. Repeated calls (images, tokens, metadata) reuse the same in-memory package.

TarDataLoader constructor sweeping the archive entries:

constructor(e, t, i, n) {

this.reader = e;

this.metricsUtil = t;

this.imageDecipher = i;

this.karamelToken = n;

this.pages = new Map;

this.layouts = new Map;

this.layoutPages = new Map;

this.images = new Map;

this.tokens = new Map;

this.panels = new Map;

this.loadingPromise = new Promise((e, t) => {

try {

this.reader.init();

const i = [];

this.reader.getEntries().forEach(e => {

const t = e.name;

t.startsWith("img_") || t.startsWith(o.ASSETS_DIR) ||

t.startsWith("rsrc") || t.endsWith(".png")

? i.push(this.addImage(e))

: t.startsWith("page_")

? i.push(this.addPage(e))

: t.startsWith("layout_data_")

? i.push(this.addLayoutPage(e))

: t.startsWith("tokens_")

? i.push(this.addTokens(e))

: t.startsWith("panels_")

? i.push(this.addPanels(e))

: "layout.json" === t

? i.push(this.addLayout(e))

: "glyphs.json" === t

? i.push(this.addGlyphs(e))

: "toc.json" === t

? i.push(this.addTableOfContent(e))

: "metadata.json" === t

? i.push(this.addMetadata(e))

: "manifest.json" === t

? i.push(this.addManifest(e))

: "location_map.json" === t &&

i.push(this.addLocationMap(e));

});

Promise.all(i).then(e).catch(e => t(e));

} catch (e) {

t(e);

}

});

}
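The nested ternary in that constructor is easier to follow as a flat classifier. The sketch below mirrors the same checks in the same order; the value of `ASSETS_DIR` is a placeholder (the real `o.ASSETS_DIR` is not visible in the minified source), and the returned labels are names I made up.

```javascript
// Placeholder for o.ASSETS_DIR, whose real value is not shown above.
const ASSETS_DIR = "assets/";

// Entries matched by exact file name.
const EXACT_NAMES = new Map([
  ["layout.json", "layout"],
  ["glyphs.json", "glyphs"],
  ["toc.json", "toc"],
  ["metadata.json", "metadata"],
  ["manifest.json", "manifest"],
  ["location_map.json", "locationMap"],
]);

// Classify an archive entry the way the constructor routes it.
function classifyEntry(name) {
  if (
    name.startsWith("img_") ||
    name.startsWith(ASSETS_DIR) ||
    name.startsWith("rsrc") ||
    name.endsWith(".png")
  ) {
    return "image";
  }
  if (name.startsWith("page_")) return "page";
  if (name.startsWith("layout_data_")) return "layoutPage";
  if (name.startsWith("tokens_")) return "tokens";
  if (name.startsWith("panels_")) return "panels";
  // Anything unrecognized is skipped, as in the original chain.
  return EXACT_NAMES.get(name) ?? "ignored";
}

console.log(classifyEntry("page_data_0_5.json"), classifyEntry("glyphs.json"));
```

Applied to the archive listing shown earlier, every file lands in one of these buckets, with page_data_0_5.json handled by the `page_` prefix check.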

4. Canvas rendering

Rendering is handled by PageImageRenderer.render. It acquires a pooled CanvasRenderingContext2D, invokes the page renderer to draw text, glyphs, and decorations, and then wraps the canvas in a DOM container.

PageImageRenderer.render delegating to the background conversion:

render(e, t) {

return o(this, void 0, void 0, function*() {

const i = this.contextPool.acquire();

try {

yield this.pageRenderer.paintPage(i, e, t);

const n = this.mountPageCanvas

? this.mountCanvas(i.canvas)

: this.instantImage(i.canvas, t.pageWidth);

this.convertCanvasInBackground(i, t.pageHeight);

return n;

} catch (e) {

this.contextPool.release(i);

throw e;

}

});

}

5. Canvas to image conversion

The conversion path in the stack trace comes from convertCanvasInBackground. A setTimeout calls canvasToImage, which first exports the canvas via canvas.toBlob (canvasToBlob), builds a temporary blob: URL, and feeds it into blobToImage. Once HTMLImageElement.decode() resolves, the code swaps the <canvas> for the <img>, releases the drawing context back to the pool, and the image remains in the flow.

convertCanvasInBackground(e, t) {

setTimeout(() => o(this, void 0, void 0, function*() {

try {

const i = yield n.a.canvasToImage(

e.canvas,

this.options.pageWidth,

t

);

try {

yield i.decode();

} catch (e) {

console.error("image cannot be decoded", e);

}

e.canvas.replaceWith(i);

} catch (e) {

console.error(

"An error occurred when converting the canvas into image",

e

);

} finally {

this.contextPool.release(e);

}

}));

}

static canvasToBlob(e) {

const t = e;

if (t.toBlob) {

return new Promise(t => {

e.toBlob(e => {

Object(r.b)(e);

t(e);

});

});

}

if (t.convertToBlob) {

return e.convertToBlob();

}

throw Error("canvasToBlob: canvas cannot be converted to a Blob");

}

static canvasToImage(e, t, i) {

return o(this, void 0, void 0, function*() {

const n = yield a.canvasToBlob(e),

r = URL.createObjectURL(n);

return this.blobToImage(r, t, i).finally(() => URL.revokeObjectURL(r));

});

}

static blobToImage(e, t, i) {

return o(this, void 0, void 0, function*() {

return new Promise((n, r) => {

const o = new Image();

o.src = e;

o.style.objectFit = "cover";

o.style.objectPosition = "top";

void 0 !== t && (o.style.width = t + "px");

void 0 !== i && (o.style.height = i + "px");

o.onload = () => {

n(o);

};

o.onerror = e => {

r(e);

};

return o;

});

});

}

So the full call chain is convertCanvasInBackground -> canvasToImage, which calls canvasToBlob and then blobToImage.

Capturing the rendered images

Instead of going through the complex DRM-protected text extraction process, one could simply capture the rendered images from the Kindle Web Reader (and use OCR to extract the text content). This approach would bypass the glyph ID mapping and randomization issues entirely.

That’s what I ended up doing.

Modified canvasToImage function

static downloadCounter = 1;

static async canvasToImage(canvas, width, height, opts = {}) {

  // Export resolution for the downloaded copy. Kept separate from the

  // width/height arguments, which size the <img> shown in the reader.

  const exportWidth = 1920;

  const exportHeight = 1174;

  // Draw the page onto a temporary canvas at the export resolution

  const scaledCanvas = document.createElement("canvas");

  scaledCanvas.width = exportWidth;

  scaledCanvas.height = exportHeight;

  const ctx = scaledCanvas.getContext("2d");

  ctx.drawImage(canvas, 0, 0, exportWidth, exportHeight);

  // Export the scaled canvas as a blob

  const blobScaled = await this.canvasToBlob(scaledCanvas);

  // Auto-download the scaled image as export_001.png, export_002.png, ...

  const index = this.downloadCounter++;

  const baseName = opts.baseName || "export";

  const extension = opts.extension || "png";

  const filename = `${baseName}_${String(index).padStart(3, "0")}.${extension}`;

  const downloadUrl = URL.createObjectURL(blobScaled);

  const link = document.createElement("a");

  link.href = downloadUrl;

  link.download = filename;

  document.body.appendChild(link);

  link.click();

  link.remove();

  // Give the browser time to start the download before freeing the blob

  setTimeout(() => {

    URL.revokeObjectURL(downloadUrl);

  }, 2000);

  // Continue with the reader's normal canvas-to-image path, passing the

  // original display size through as the unmodified function did

  const blobOriginal = await this.canvasToBlob(canvas);

  const objectUrl = URL.createObjectURL(blobOriginal);

  try {

    return await this.blobToImage(objectUrl, width, height);

  } finally {

    URL.revokeObjectURL(objectUrl);

  }

}

This modified canvasToImage function automatically downloads each rendered page as a PNG file.
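One detail worth noting is the zero padding in the filename. Tools that consume the files in name order depend on it, and three digits only hold up to page 999. The sketch below isolates the naming scheme in a hypothetical `exportFilename` helper (the `width` parameter is my addition) and uses a plain lexicographic sort as a stand-in for how a shell typically orders glob matches.

```javascript
// Same naming scheme as the modified canvasToImage, with the padding
// width made configurable (an addition for this example).
function exportFilename(index, baseName = "export", extension = "png", width = 3) {
  return `${baseName}_${String(index).padStart(width, "0")}.${extension}`;
}

// Plain lexicographic sort of a copy of the list.
const sortNames = (names) => [...names].sort();

// With 3 digits, page 1000 sorts before page 999.
const threeDigits = sortNames([exportFilename(999), exportFilename(1000)]);
// With 4 digits, the order matches the page order again.
const fourDigits = sortNames([
  exportFilename(999, "export", "png", 4),
  exportFilename(1000, "export", "png", 4),
]);

console.log(threeDigits, fourDigits);
```

For books rendered into more than 999 images, widening the padding keeps the name order and the page order in agreement.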

The next step is editing the download location in the browser settings to point to a dedicated folder, and toggling “Ask where to save each file before downloading” to off. Then reload the Kindle Web Reader, navigate through the book, and each page will be saved as an image file automatically.

Automating page navigation

To automate navigation through the book, I ran this snippet in the browser console. It simulates pressing the “Right Arrow” key once per second, effectively turning the pages automatically:

setInterval(() => {

const event = new KeyboardEvent('keydown', {

key: 'ArrowRight',

code: 'ArrowRight',

keyCode: 39,

which: 39,

bubbles: true

});

document.dispatchEvent(event);

}, 1000);
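As written, the interval keeps firing after the last page until the tab is reloaded. A possible variant that stops after a fixed number of turns is sketched below. `createPageTurner` is a hypothetical helper, and the budget of 200 turns is an arbitrary placeholder to be set from the book's length.

```javascript
// Returns a tick() function that sends one key press per call and reports
// whether more turns remain. `dispatchKey` is injected so the counting
// logic can be exercised outside a browser.
function createPageTurner(dispatchKey, maxTurns) {
  let turns = 0;
  return function tick() {
    if (turns >= maxTurns) return false;
    dispatchKey("ArrowRight");
    turns += 1;
    return turns < maxTurns;
  };
}

// Browser wiring; skipped where `document` does not exist.
if (typeof document !== "undefined") {
  const tick = createPageTurner((key) => {
    document.dispatchEvent(
      new KeyboardEvent("keydown", {
        key,
        code: key,
        keyCode: 39,
        which: 39,
        bubbles: true,
      })
    );
  }, 200); // placeholder budget of page turns
  const timer = setInterval(() => {
    if (!tick()) clearInterval(timer);
  }, 1000);
}
```

Keeping the counter separate from the event dispatch also makes it easy to swap in a different stop condition later.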

Converting to PDF

Finally, I used ImageMagick to convert the images to a PDF:

magick export_*.png output.pdf

And because the browser displays two pages at a time, I split each image into two separate pages before converting to PDF:

for f in export_*.png; do

magick "$f" -crop 2x1@ +repage "${f%.png}_slice_%d.png"

done

And for the cover page:

magick export_001.png -crop 960x1174+480+0 +repage export_001_slice_0.png

rm export_001_slice_1.png
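The crop geometry above follows from the 1920x1174 export size, assuming the cover is rendered as a single page centered in the two-page spread. A small sketch of the arithmetic, with `centeredCropGeometry` as a hypothetical helper that produces the WxH+X+Y string ImageMagick expects:

```javascript
// Geometry string for cropping one page-wide column from the horizontal
// center of an image, in ImageMagick's WxH+X+Y notation.
function centeredCropGeometry(imageWidth, imageHeight, pageWidth) {
  const x = Math.floor((imageWidth - pageWidth) / 2);
  return `${pageWidth}x${imageHeight}+${x}+0`;
}

console.log(centeredCropGeometry(1920, 1174, 960));
```

If a different export size is used, the same function gives the matching offset for the cover crop.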

The rendered images can then be processed with OCR tools if text extraction is needed, or simply combined into a PDF for offline reading.