The Problem: Too Much Copy-Pasting

I was studying for my French math exams and constantly found myself copying exercises from Bibmath.net into LaTeX documents. The site has tons of quality exercises with solutions, but manually formatting them was getting annoying. Copy, paste, fix formatting, add LaTeX commands, repeat. There had to be a better way.

So I built a scraper that does all of this automatically. Nothing fancy, just a Python script that grabs exercises and spits out proper LaTeX files ready for compilation.

Web Scraping French Math Content

The first challenge was understanding Bibmath’s HTML structure. Each exercise page has a specific layout with exercises, hints (“indication”), and solutions (“corrigé”). Here’s what the scraper does:

```python
import requests
from bs4 import BeautifulSoup


def fetch_chapitre(page):
    headers = {
        'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)...',
        # ... more headers to avoid getting blocked
    }
    response = requests.get(page, headers=headers, timeout=30)
    response.raise_for_status()  # fail loudly on 404/500 instead of parsing an error page
    soup = BeautifulSoup(response.text, 'lxml')
    article = soup.find('article', id='contenugauche')

    # Extract chapter title and create structure
    title = article.find('h1').get_text(strip=True)
    chapitre = Chapitre(title, url=response.url)
    current_part = None

    # Process each section and exercise (direct children of the article only)
    for element in article.find_all(recursive=False):
        classes = element.get('class', [])
        if 'titrepartie' in classes:
            # New section found
            current_part = Part(element.get_text(strip=True))
            chapitre.add_part(current_part)
        elif 'exo' in classes:
            # Exercise found - extract all the data
            if current_part is None:
                # Exercise before any section title: put it in an untitled part
                current_part = Part('')
                chapitre.add_part(current_part)
            current_part.add_exercise(extract_exercise_data(element))

    return chapitre
```
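`Chapitre`, `Part` and `extract_exercise_data` aren't shown in the post. A minimal sketch of the containers, assuming plain dataclasses: the constructor shapes match the calls above (`Chapitre(title, url=...)`, `Part(title)`, `add_part`, `add_exercise`), while the `Exercise` class, its fields and `exercise_count` are hypothetical additions for illustration.

```python
from dataclasses import dataclass, field


# Hypothetical container types; only the constructor and add_* shapes come from the post.
@dataclass
class Exercise:
    # Hypothetical fields: one exercise with its hint ("indication") and solution ("corrigé")
    number: str
    statement: str
    hint: str = ""
    solution: str = ""
    difficulty: int = 1


@dataclass
class Part:
    # One section of a chapter: a "titrepartie" heading and the exercises under it
    title: str
    exercises: list[Exercise] = field(default_factory=list)

    def add_exercise(self, exercise: Exercise) -> None:
        self.exercises.append(exercise)


@dataclass
class Chapitre:
    # A whole chapter page: its title, source URL and ordered list of parts
    title: str
    url: str = ""
    parts: list[Part] = field(default_factory=list)

    def add_part(self, part: Part) -> None:
        self.parts.append(part)

    def exercise_count(self) -> int:
        # Total number of exercises across all parts
        return sum(len(p.exercises) for p in self.parts)
```

Keeping parts as an ordered list (rather than a dict keyed by title) preserves the page order, which the LaTeX generator later walks three times.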

Converting HTML Math to LaTeX

The trickiest part was handling the mathematical content. Bibmath mixes HTML formatting with special characters, and both need to end up as proper LaTeX. The core cleanup step strips the HTML and flattens numbered lists:

```python
from bs4 import BeautifulSoup


def parse(content):
    soup2 = BeautifulSoup(str(content), 'lxml')

    # Remove unwanted tags together with their contents
    # (tag.unwrap() would drop the tag but keep its text)
    for tag in soup2.find_all(['span', 'a', 'img']):
        tag.extract()

    # Handle numbered lists specially: each <li> becomes "1. ...", "2. ...", ...
    for ol_list in soup2.find_all('ol', class_='enumeratechiffre'):
        questions = ["\\par"]  # LaTeX paragraph break before the first question
        # recursive=False so items of a nested list aren't counted twice
        for i, li in enumerate(ol_list.find_all('li', recursive=False), 1):
            questions.append(f"{i}. {li.get_text().strip()}")
        list_text = "\n\n".join(questions)
        ol_list.replace_with(list_text)

    return soup2.get_text().strip()
```

This handles the common case where exercises have multiple sub-questions in numbered lists. The output looks clean in LaTeX.
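The `parse` step above strips tags, but the "special characters" half isn't shown. One way to handle it, assuming the extracted text contains Unicode math symbols such as ², ±, ∞ or ℝ, is a lookup table applied character by character. The table below is a hypothetical starting point, not Bibmath's actual character set; it doesn't decide where math mode starts or ends, so its output is meant to sit inside `$...$`, and `\mathbb` needs the amssymb (or amsfonts) package in the header.

```python
# Hypothetical mapping from Unicode math symbols to LaTeX math-mode commands.
# Commands get a trailing space so "±x" becomes "\pm x", not the undefined "\pmx".
UNICODE_TO_LATEX = {
    "ℝ": r"\mathbb{R}",
    "ℕ": r"\mathbb{N}",
    "ℤ": r"\mathbb{Z}",
    "ℚ": r"\mathbb{Q}",
    "ℂ": r"\mathbb{C}",
    "±": r"\pm ",
    "∞": r"\infty ",
    "≤": r"\leq ",
    "≥": r"\geq ",
    "≠": r"\neq ",
    "×": r"\times ",
    "→": r"\to ",
    "∈": r"\in ",
    "²": "^2",
    "³": "^3",
}


def to_latex_math(text: str) -> str:
    """Replace known Unicode symbols with LaTeX commands; other characters pass through."""
    return "".join(UNICODE_TO_LATEX.get(ch, ch) for ch in text)
```

For example, `to_latex_math("x² + 2x - 3")` returns `x^2 + 2x - 3`. A dictionary lookup keeps the rules in one place, so a new symbol found on some chapter page is a one-line addition.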

LaTeX File Generation

Once I had the exercise data parsed, generating the LaTeX was straightforward. I created a template system with header and footer files:

```python
class LatexFile:
    def generate_latex(self, chapitre):
        self.add_header()  # Standard LaTeX packages and setup

        # Add source attribution
        self.add_source(chapitre)

        # Generate exercises section
        self.add_content(f"\\title{{{chapitre.title}}}")
        for part in chapitre.parts:
            self.add_content(f"\\section{{{part.title}}}")
            for ex in part.exercises:
                self.add_exercise(ex)

        # Separate sections for hints and solutions
        self.add_pagebreak()
        for part in chapitre.parts:
            for ex in part.exercises:
                self.add_indication(ex)

        self.add_pagebreak()
        for part in chapitre.parts:
            for ex in part.exercises:
                self.add_answer(ex)

        self.add_footer()
        self.save()
```
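`generate_latex` relies on plumbing the post doesn't show (`add_header`, `add_content`, `add_pagebreak`, `add_footer`, `save`). A minimal sketch of those helpers, assuming the class buffers text in a list and reads the header and footer from files; `LatexBuffer` and the default names `header.tex` / `footer.tex` are hypothetical, while the `dump/<title>.tex` output path matches the console output shown later. `LatexFile` could inherit from something like this.

```python
from pathlib import Path


class LatexBuffer:
    """Hypothetical stand-in for LatexFile's helper methods (not the original code)."""

    def __init__(self, title, out_dir="dump", header="header.tex", footer="footer.tex"):
        self.lines = []
        self.title = title
        self.out_dir = Path(out_dir)
        self.header = Path(header)  # assumed: \documentclass, packages, \begin{document}
        self.footer = Path(footer)  # assumed: ends with \end{document}

    def add_content(self, text):
        self.lines.append(text)

    def add_header(self):
        self.add_content(self.header.read_text(encoding="utf-8"))

    def add_footer(self):
        self.add_content(self.footer.read_text(encoding="utf-8"))

    def add_pagebreak(self):
        self.add_content("\\newpage")

    def save(self):
        # Chapter titles become file names; replace "/" so a title can't point into a subfolder
        safe_name = self.title.replace("/", "-")
        self.out_dir.mkdir(parents=True, exist_ok=True)
        path = self.out_dir / f"{safe_name}.tex"
        path.write_text("\n".join(self.lines) + "\n", encoding="utf-8")
        print(f"LaTeX file generated: {path}")
        return path
```

Writing with an explicit UTF-8 encoding matters here: titles like "Polynômes" end up in both the file name and the file body.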

Each exercise gets formatted with custom LaTeX commands that I defined in the header:

```latex
\exercice{12345, name, date, 3, Étude de fonction}
\enonce{12345}{}
Soit $f$ la fonction définie sur $\mathbb{R}$ par $f(x) = x^2 + 2x - 3$.

1. Montrer que $f$ est continue sur $\mathbb{R}$.

2. Déterminer les limites de $f$ en $\pm\infty$.
\finenonce{12345}
\finexercice
```
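The block above suggests that `add_exercise` wraps each statement in `\exercice{...}`, `\enonce{...}{}`, `\finenonce{...}` and `\finexercice`. A minimal sketch of producing that text, assuming the fields are passed in directly; `format_exercise` and its parameter names are hypothetical, since the real `add_exercise` isn't shown.

```python
def format_exercise(number, author, date, difficulty, title, statement):
    """Build the LaTeX for one exercise with the custom macros from the header.

    Hypothetical helper: the argument names are illustrative, the macro layout
    mirrors the example above.
    """
    return "\n".join([
        f"\\exercice{{{number}, {author}, {date}, {difficulty}, {title}}}",
        f"\\enonce{{{number}}}{{}}",
        statement,
        f"\\finenonce{{{number}}}",
        "\\finexercice",
    ])
```

In an f-string, `{{` and `}}` produce literal braces, so `{{{number}}}` is a brace, the value, and a closing brace: exactly the argument syntax LaTeX expects.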

Batch Processing and PDF Generation

To handle multiple chapters, I built a simple batch system. The script reads URLs from a text file and processes them all:

```python
if __name__ == "__main__":
    with open('pages.txt', 'r', encoding='utf-8') as f:
        for line in f:
            page = line.strip()
            if page:  # skip blank lines
                get_page(page)
```

Running the scraper looks like this:

```text
$ python grab.py
LaTeX file generated: dump/Nombres complexes.tex
LaTeX file generated: dump/Polynômes.tex
LaTeX file generated: dump/Fractions rationnelles.tex
LaTeX file generated: dump/Espaces vectoriels.tex
...
```
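In the `__main__` loop above, if `get_page` raises (a timeout, a changed page layout), the whole batch stops, and the requests go out back to back. A more forgiving variant, sketched under my own assumptions: `read_urls`, `run_batch`, `#` comment lines in `pages.txt` and the one-second default delay are all hypothetical, and `process` would be the script's `get_page`.

```python
import time


def read_urls(path):
    """Return the non-empty lines of a URL list, ignoring lines starting with '#'
    (a hypothetical convention for commenting out chapters)."""
    with open(path, encoding="utf-8") as f:
        stripped = (line.strip() for line in f)
        return [url for url in stripped if url and not url.startswith("#")]


def run_batch(urls, process, delay=1.0):
    """Call process(url) for each URL, pausing between requests.

    Failures are collected instead of aborting the run; returns the failed URLs.
    """
    failed = []
    for i, url in enumerate(urls):
        if i > 0:
            time.sleep(delay)  # be polite to the server between requests
        try:
            process(url)
        except Exception as exc:  # keep going if one chapter fails
            print(f"Failed on {url}: {exc}")
            failed.append(url)
    return failed
```

Wired into the script, the entry point would become `run_batch(read_urls('pages.txt'), get_page)`, and the returned list tells you which chapters to retry.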

Then a bash script compiles everything to PDF:

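```bash
#!/bin/bash

mkdir -p output  # pdflatex won't create the output directory itself

for tex_file in dump/*.tex; do
    [ -e "$tex_file" ] || continue  # no .tex files: the glob stays unexpanded
    filename=$(basename "$tex_file")
    echo "Processing $filename..."
    # nonstopmode: report errors and move on instead of waiting for keyboard input
    if pdflatex -interaction=nonstopmode -output-directory="output" "$tex_file"; then
        echo "Successfully generated PDF for $filename"
    else
        echo "Error generating PDF for $filename"
    fi
done
```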

Console output during compilation:

```text
$ ./gen.sh
Processing Nombres complexes.tex...
This is pdfTeX, Version 3...
...
Output written on output/Nombres complexes.pdf (47 pages, 892341 bytes).
Successfully generated PDF for Nombres complexes.tex
Processing Polynômes.tex...
...
Successfully generated PDF for Polynômes.tex
```
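If you'd rather keep the whole pipeline in Python, the compile loop can be sketched with `subprocess`. This is my own port of `gen.sh`, not part of the original project; it passes `-interaction=nonstopmode` and `-halt-on-error` so a broken file reports an error instead of stopping at pdflatex's interactive prompt, and the `dump`/`output` defaults mirror the folders used above.

```python
import subprocess
from pathlib import Path


def pdflatex_command(tex_file, out_dir):
    """Command line for one non-interactive pdflatex run."""
    return [
        "pdflatex",
        "-interaction=nonstopmode",  # never wait for keyboard input on errors
        "-halt-on-error",            # stop at the first error in this file
        f"-output-directory={out_dir}",
        str(tex_file),
    ]


def compile_all(src_dir="dump", out_dir="output"):
    """Compile every .tex file in src_dir into out_dir; return the names that failed."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)  # pdflatex won't create the directory itself
    failed = []
    for tex in sorted(Path(src_dir).glob("*.tex")):
        print(f"Processing {tex.name}...")
        # Passing a list (no shell) means spaces in "Nombres complexes.tex" need no quoting
        result = subprocess.run(pdflatex_command(tex, out), stdout=subprocess.DEVNULL)
        if result.returncode == 0:
            print(f"Successfully generated PDF for {tex.name}")
        else:
            print(f"Error generating PDF for {tex.name}")
            failed.append(tex.name)
    return failed
```

Returning the failed names rather than just printing them makes it easy to rerun only the chapters that broke.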

What I Ended Up With

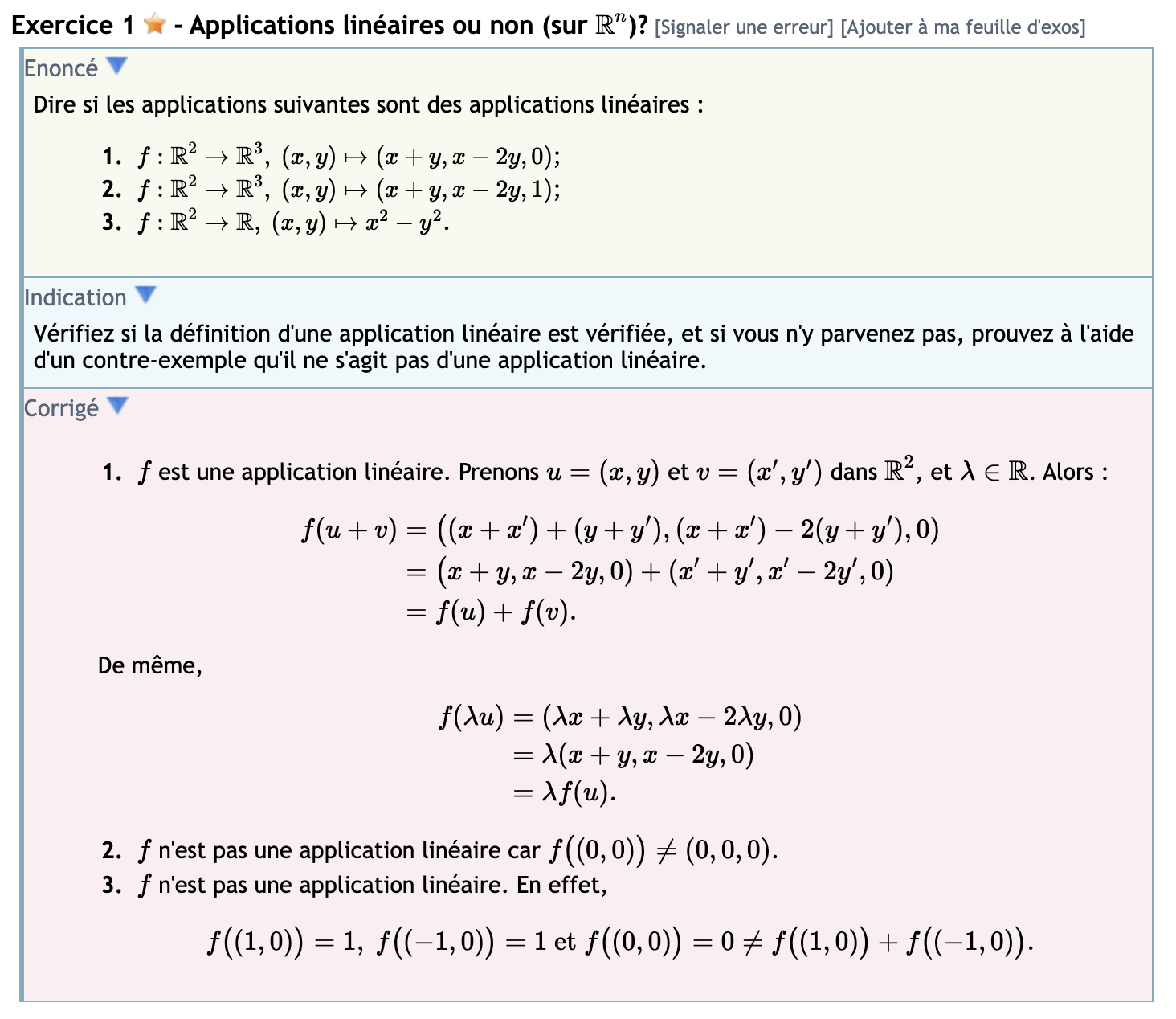

The final system gives me clean PDF exercise sheets organized by chapter and topic. Each PDF has:

- Exercises section: All problems clearly formatted with difficulty stars

- Hints section: Separated so I can try problems first

- Solutions section: Complete worked solutions

The PDFs look professional and are much easier to print and study from than the website. I can now grab any Bibmath chapter and have a LaTeX version in seconds.

Here’s the typical file structure after running everything:

```text
dump/
    Nombres complexes.tex
    Polynômes.tex
    Espaces vectoriels.tex
    ...
output/
    Nombres complexes.pdf
    Polynômes.pdf
    Espaces vectoriels.pdf
    ...
```

If You Want to Try It

The code is straightforward Python built on requests and BeautifulSoup, with lxml as the parser. You'll need a pages.txt file with Bibmath URLs (one per line), plus header/footer LaTeX files for styling.

It’s specifically built for Bibmath’s HTML structure, so it won’t work on other sites without modification. But if you’re studying French math and want better-formatted exercises, it might save you some time.